Exploration Activity: MPEG Audio Coding for Machines (ACoM)

ISO/IEC JTC 1/SC 29 (MPEG) is currently exploring whether a new activity concerning "Audio Coding for Machines" (ACoM) should be started.

It is still possible to influence the MPEG work on this topic, either by defining use cases and requirements or, later, by proposing technology. If you are simply interested in the existence of an interoperable standard for spatial acoustic data, an Expression of Interest would also be helpful.

Currently, the AdHoc Groups on "Market Needs" of WG2 "Technical Requirements" and on "Audio Coding for Machines" are collecting information.

How to contribute?

There are several possibilities:

- If you want to support the activity by sending an expression of interest, follow the instructions in the public document "Call for Interest". The expression of interest should be sent to the chair of WG2, Igor Curcio (email is sufficient). A template for such a letter can be found here.

- If you are an MPEG member, you can use the template of MPEG WG2 (Technical Requirements) on "Market & practical considerations" (Doc. w20949) and submit an individual contribution for the next meeting via https://dms.mpeg.expert/. Please select WG2 and the AdHoc Group "Market Needs".

As an MPEG member, you might also want to subscribe to the "market needs" and/or mpeg-acom mailing lists. For the URLs, see the output documents of WG2 from the last MPEG meeting (password protected).

- If you are not an MPEG member, you can send me an email directly at Thomas.Sporer (at) idmt.fraunhofer.de.

- Separate documents for different use cases and different contributors are possible.

MPEG 146 will be running from 2024-04-22 to 2024-04-26 in Rennes.

Resources and additional information

- (public) output document from MPEG 145 (Online): Use Cases and Requirements for Audio Coding for Machines (2023-04-30)

- (public) output document from MPEG 142 (Antalya) (OLD): Use Cases and Requirements for Audio Coding for Machines (2023-04-30)

Background:

The following section is an introduction to the topic that was written before March 2023, so some of the information below may be outdated. However, the text gives an indication of the ideas behind "Audio Coding for Machines".

Key ideas

All standards created by MPEG Audio so far have been optimized for human perception. A key component of MPEG Audio encoders is a model of human perception (a psychoacoustic model): the output of a coding scheme is regarded as good if listeners perceive it as good. In this way, irrelevant information is discarded and/or the error introduced by the data reduction is shaped so that it is not audible.

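When computer programs are used to analyze acoustic scenes, lossy audio coding might not be adequate, because information that is inaudible to humans, and is therefore discarded or masked by a perceptual coder, may still be relevant to an algorithm. In contrast to human listeners, who can adapt quickly to the recording situation, machine listening benefits from additional information about the recording situation (metadata).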

Therefore, audio data formats are needed that are (near-)lossless and can carry the metadata necessary for machine listening. Such formats should be able to store spatial audio data, e.g., from measurements around a machine. Unlike AES69 "Spatially Oriented Format for Acoustics" (SOFA), ACoM should store not just impulse responses but also longer time series of spatial recordings.
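To make "(near-)lossless plus metadata" more concrete, here is a minimal sketch under loudly stated assumptions. The record layout, the class and field names (`SpatialRecording`, `mic_positions_m`, `encode`, `decode`) and the use of Python's general-purpose `zlib` compressor as the lossless coder are all hypothetical illustrations. They are not part of any ACoM specification, since ACoM is still at the exploration stage. The sketch only shows the property that matters for machine listening: every PCM sample and the sensor geometry come back bit-exactly after the round trip. A perceptual (lossy) coder does not guarantee this.

```python
import math
import struct
import zlib
from dataclasses import dataclass

HEADER = "<IHI"      # sample rate, channel count, frame count (little-endian, no padding)
POSITION = "<3d"     # one (x, y, z) microphone position as three doubles


@dataclass
class SpatialRecording:
    """Hypothetical container for a multichannel recording plus metadata.
    This is NOT the ACoM format; none has been specified yet."""
    sample_rate_hz: int
    mic_positions_m: list[tuple[float, float, float]]  # one position per channel, metres
    samples: list[list[int]]                            # 16-bit PCM, samples[frame][channel]


def encode(rec: SpatialRecording) -> bytes:
    n_ch = len(rec.mic_positions_m)
    header = struct.pack(HEADER, rec.sample_rate_hz, n_ch, len(rec.samples))
    # Metadata (sensor geometry) is stored uncompressed next to the audio.
    positions = b"".join(struct.pack(POSITION, *p) for p in rec.mic_positions_m)
    pcm = b"".join(struct.pack(f"<{n_ch}h", *frame) for frame in rec.samples)
    # zlib is a generic lossless coder, used here only as a stand-in.
    return header + positions + zlib.compress(pcm, level=9)


def decode(blob: bytes) -> SpatialRecording:
    sr, n_ch, n_frames = struct.unpack_from(HEADER, blob, 0)
    offset = struct.calcsize(HEADER)
    pos_size = struct.calcsize(POSITION)
    positions = [struct.unpack_from(POSITION, blob, offset + pos_size * i) for i in range(n_ch)]
    offset += pos_size * n_ch
    pcm = zlib.decompress(blob[offset:])
    frame_size = struct.calcsize(f"<{n_ch}h")
    samples = [list(struct.unpack_from(f"<{n_ch}h", pcm, frame_size * i)) for i in range(n_frames)]
    return SpatialRecording(sr, positions, samples)


if __name__ == "__main__":
    # Two hypothetical microphones on either side of a machine, 10 ms of a 440 Hz tone.
    rec = SpatialRecording(
        48000,
        [(1.0, 0.0, 0.5), (-1.0, 0.0, 0.5)],
        [[int(1000 * math.sin(2 * math.pi * 440 * n / 48000)), 0] for n in range(480)],
    )
    assert decode(encode(rec)) == rec  # bit-exact round trip, metadata included
```

A real format would need much richer metadata and a coder tuned to audio signals. The sketch keeps the metadata uncompressed so it stays readable without decoding the audio.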

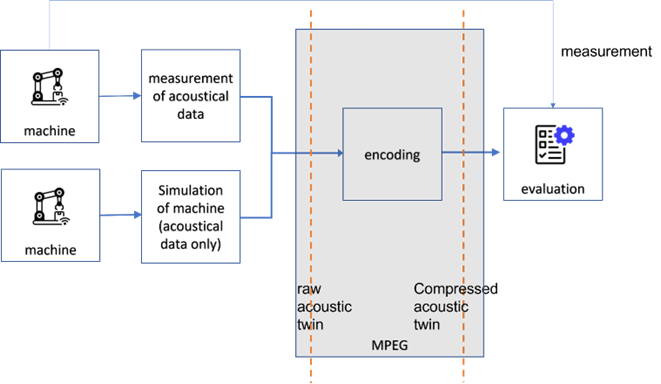

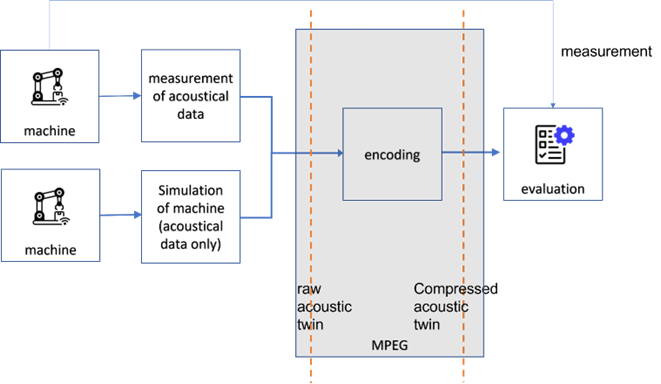

It is expected that ACoM will have several phases:

- Phase 0: A lossless format focusing on metadata ("raw acoustic twin" in Figure 1).

- Phase 1: A (near-)lossless format including metadata. This format is application-agnostic but might not achieve the best possible compression ratio ("compressed acoustic twin" in Figure 1).

- Phase 2: Further bit-rate reduction beyond Phase 1 by coding acoustic features. This achieves better compression, but different applications need different feature sets (not shown in Figure 1).

Fig. 1 - Example: ACoM in an industrial application: A machine in operation is either measured or simulated, creating an "acoustic twin". Its data size is reduced by MPEG ACoM coding to create "compressed acoustic twin" files. Many such files are used to train an evaluation algorithm (not in the scope of MPEG). The evaluation algorithm then compares measured acoustic data of a machine with the learned operating states.


Applications

Collection of requirements has started for the following applications:

- Industrial applications

- Prediction of noise exposure

- Traffic measurement

- Construction site monitoring

- Speech recognition and acoustic scene analysis

- Storage of timed medical data

- ...

Publicly Available Documents

Currently, two MPEG Working Groups (WGs) have published documents concerning applications and requirements:

- WG2 "Requirements" has published Draft Use Cases and Draft Requirements on Audio Coding for Machines (ACoM) (password protected).

- WG6 "Audio" has published Thoughts on Use Cases and Requirements for Audio Coding for Machines (ACoM) (password protected).

© 2024 Thomas Sporer, Kieler Str. 7A, 90766 Fürth, Thomas.Sporer(at)idmt.fraunhofer.de